Diffusion models have revolutionized image generation and editing, producing state-of-the-art results in conditioned and unconditioned image synthesis.

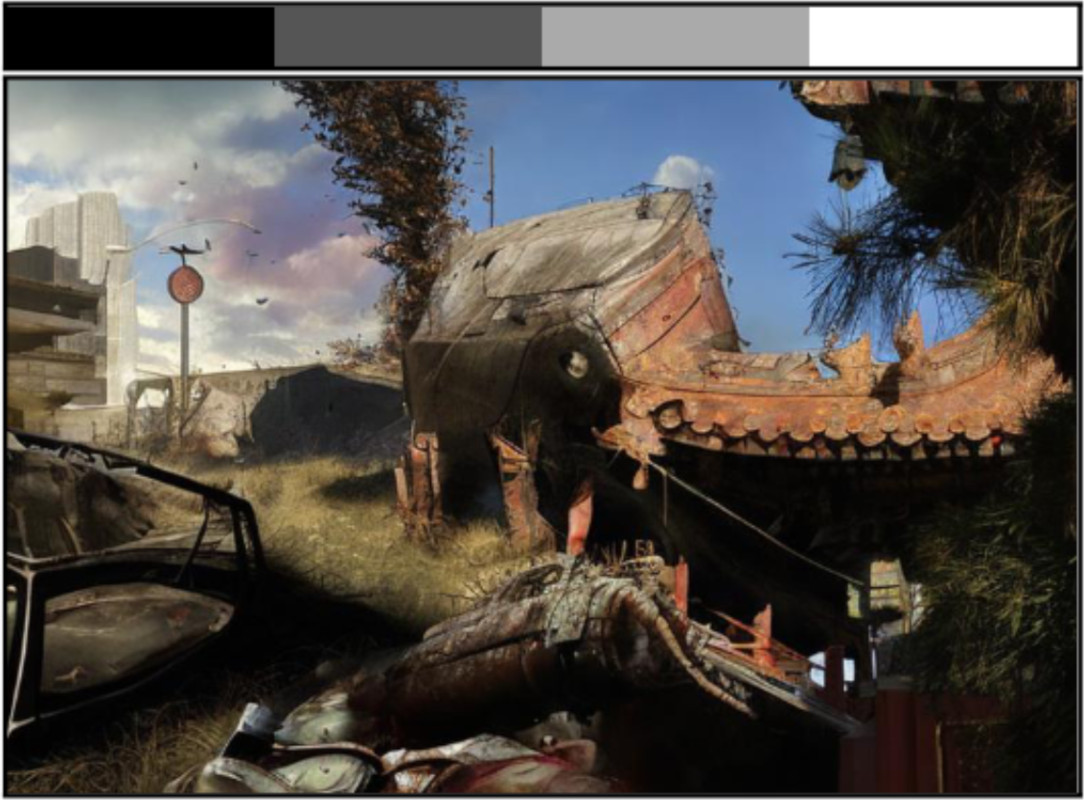

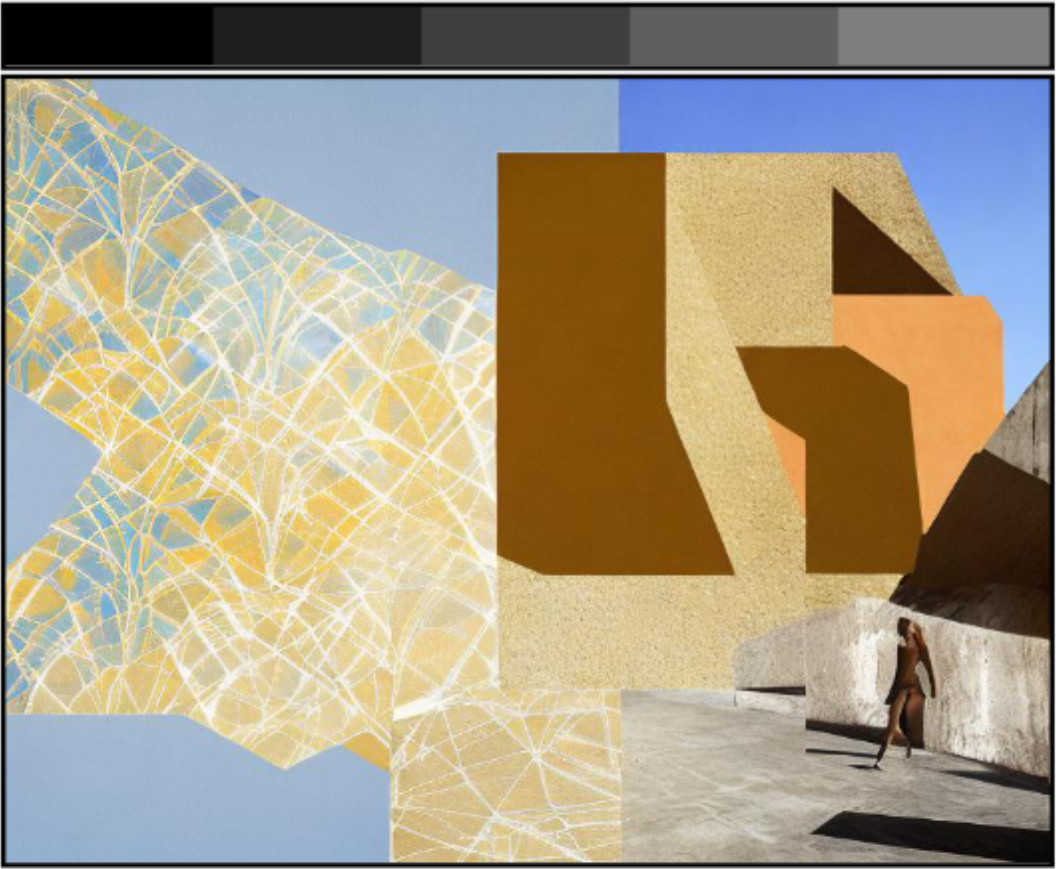

While current techniques enable user control over the degree of change in an image edit, the controllability is limited to global changes over an entire edited region.

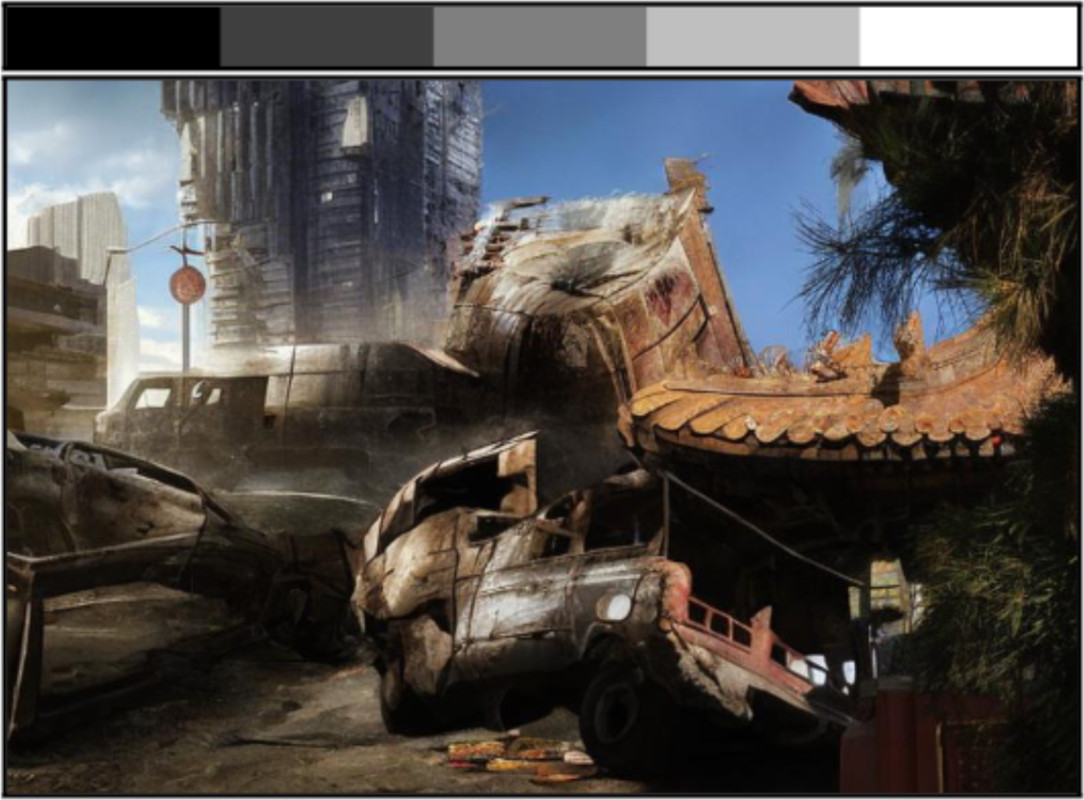

This paper introduces a novel framework that enables customization of the amount of change per pixel or per image region. Our framework can be integrated into any existing diffusion model, enhancing it with this capability.
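To make the idea of a per-pixel or per-region amount of change concrete, the sketch below builds a change map: a 2D grid holding one strength value per pixel. This is only an illustration under stated assumptions. The function name `region_change_map`, the box-based region format, and the convention that 0 means "keep the pixel" and 1 means "change it fully" are all hypothetical and are not taken from the paper.

```python
def region_change_map(height, width, regions, default=0.0):
    """Build a per-pixel change map as a list of rows.

    regions: list of (top, left, bottom, right, strength) boxes, with
    bottom/right exclusive and strength in [0, 1]. Later boxes overwrite
    earlier ones. All names and conventions here are hypothetical.
    """
    if not 0.0 <= default <= 1.0:
        raise ValueError("default strength must be in [0, 1]")
    cmap = [[default] * width for _ in range(height)]
    for top, left, bottom, right, strength in regions:
        if not 0.0 <= strength <= 1.0:
            raise ValueError("strength must be in [0, 1]")
        # Clip each box to the image bounds before filling it.
        for y in range(max(top, 0), min(bottom, height)):
            for x in range(max(left, 0), min(right, width)):
                cmap[y][x] = strength
    return cmap


# Example: one object edited strongly, another only slightly,
# and the rest of the image left at the default strength.
example_map = region_change_map(
    4, 6, [(0, 0, 2, 3, 0.8), (1, 2, 4, 6, 0.3)]
)
```

A single global strength corresponds to the special case where every entry of the map has the same value; the per-region boxes are what a global control cannot express.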

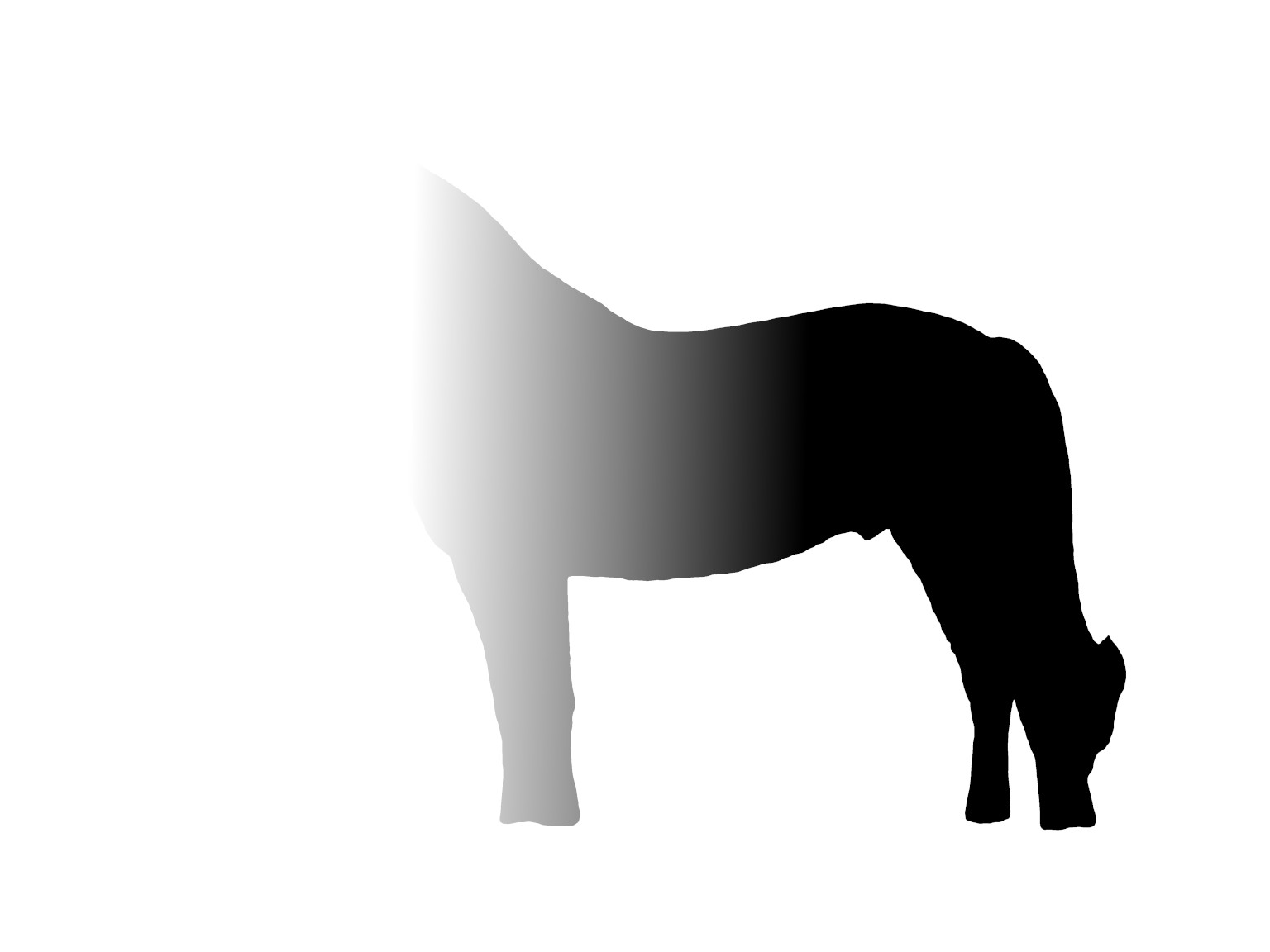

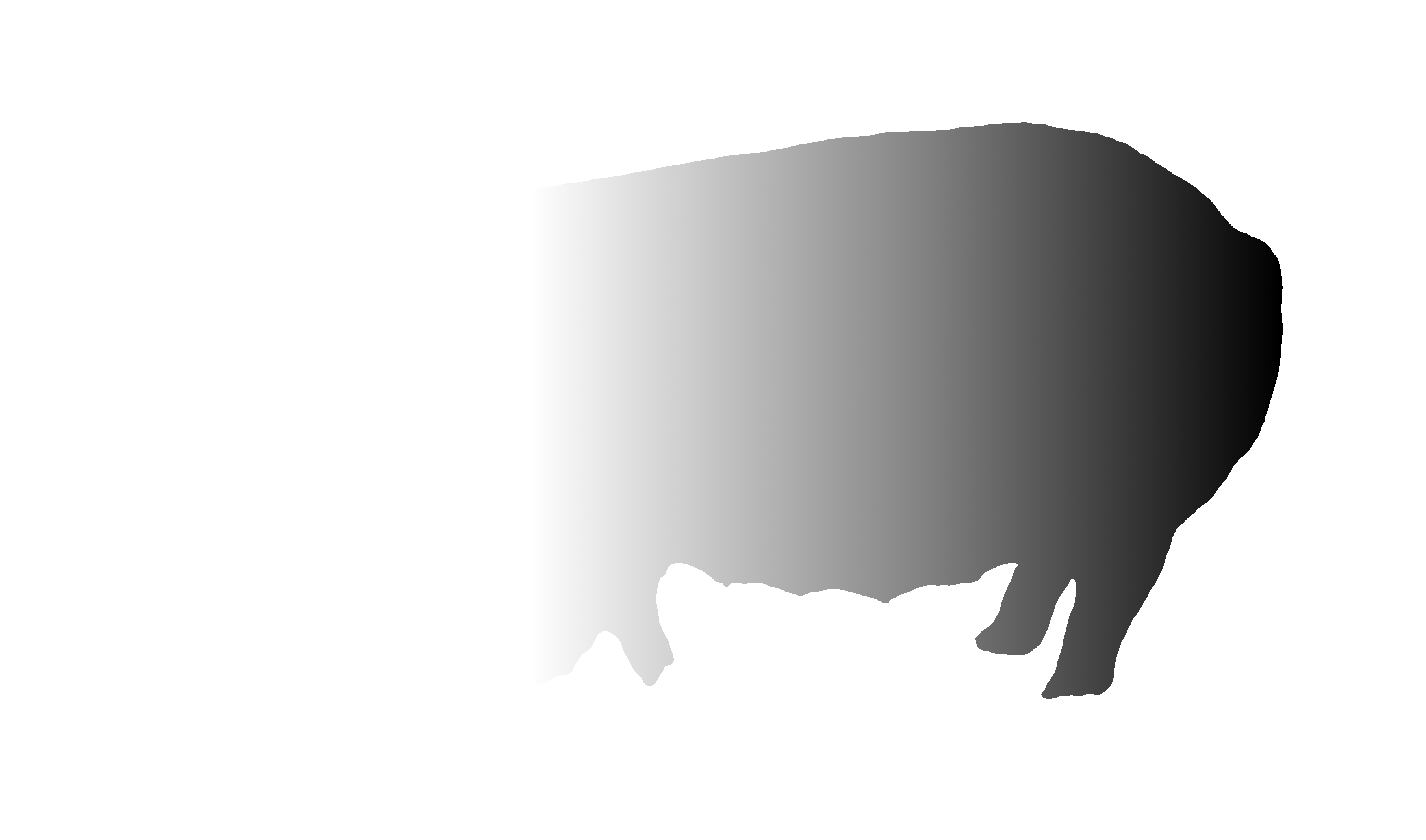

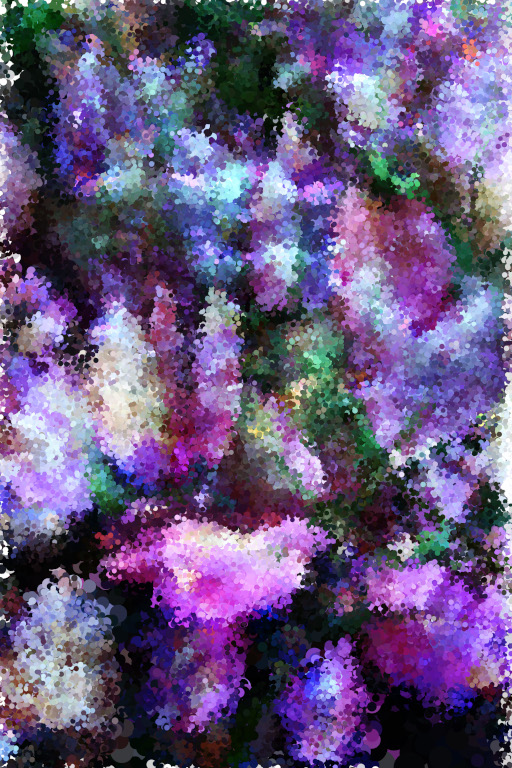

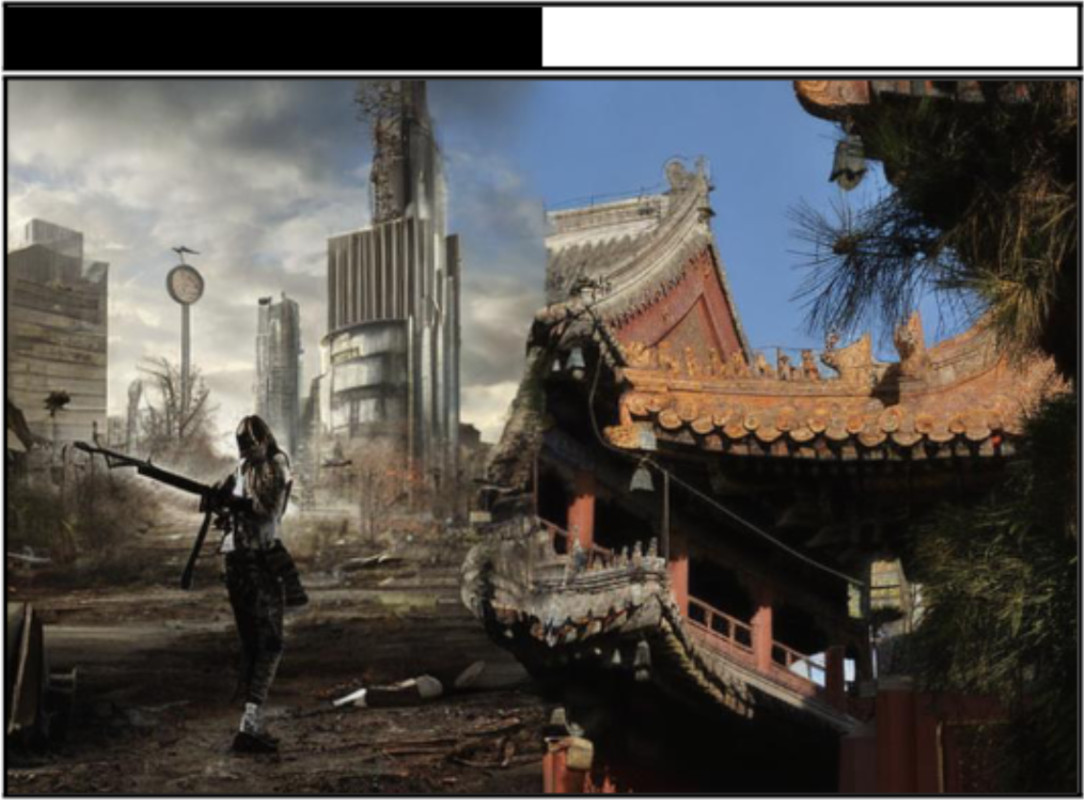

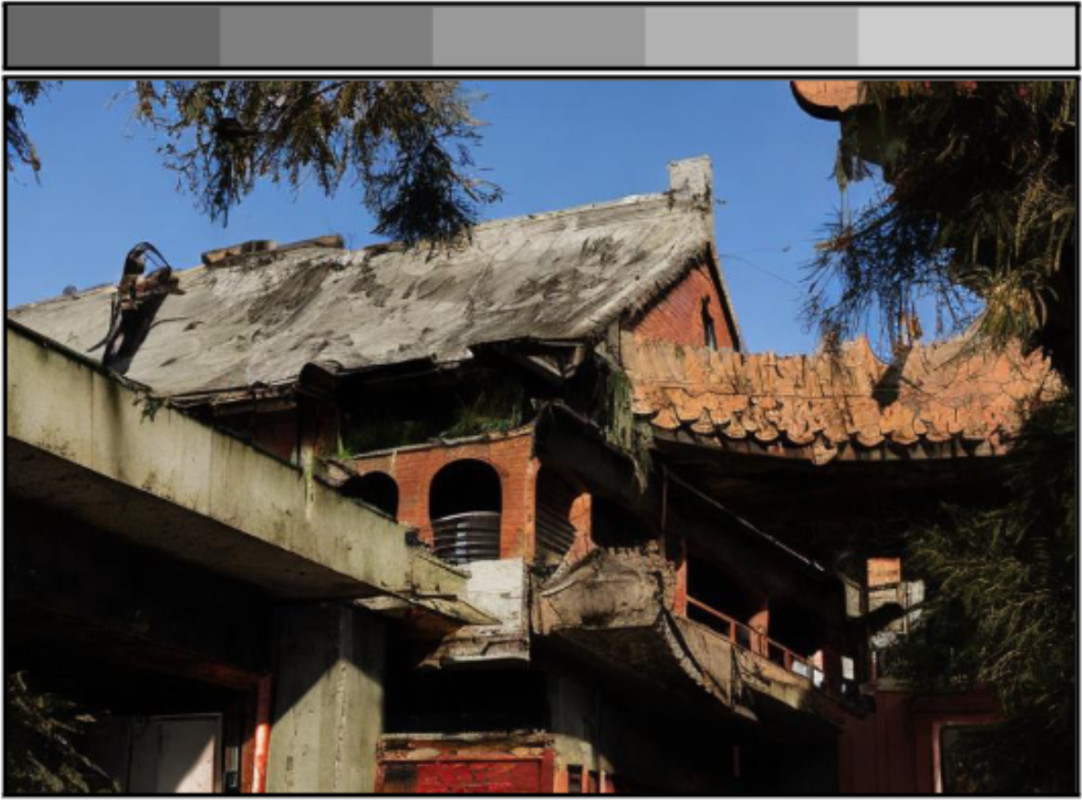

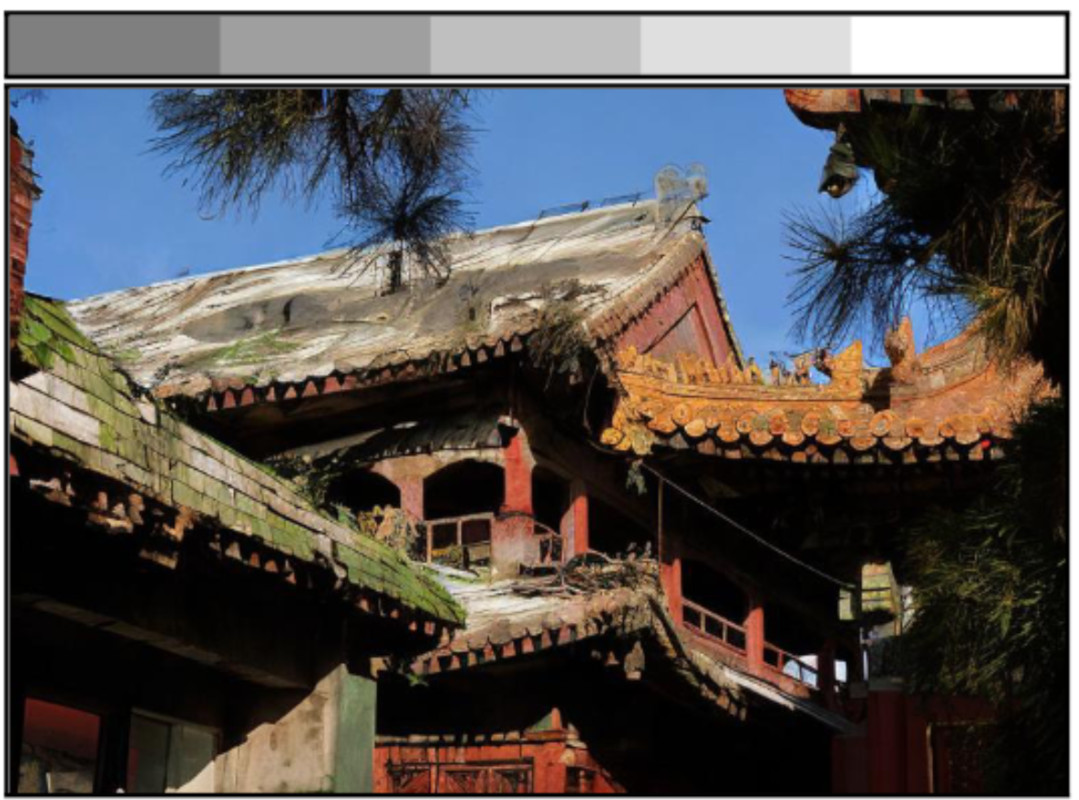

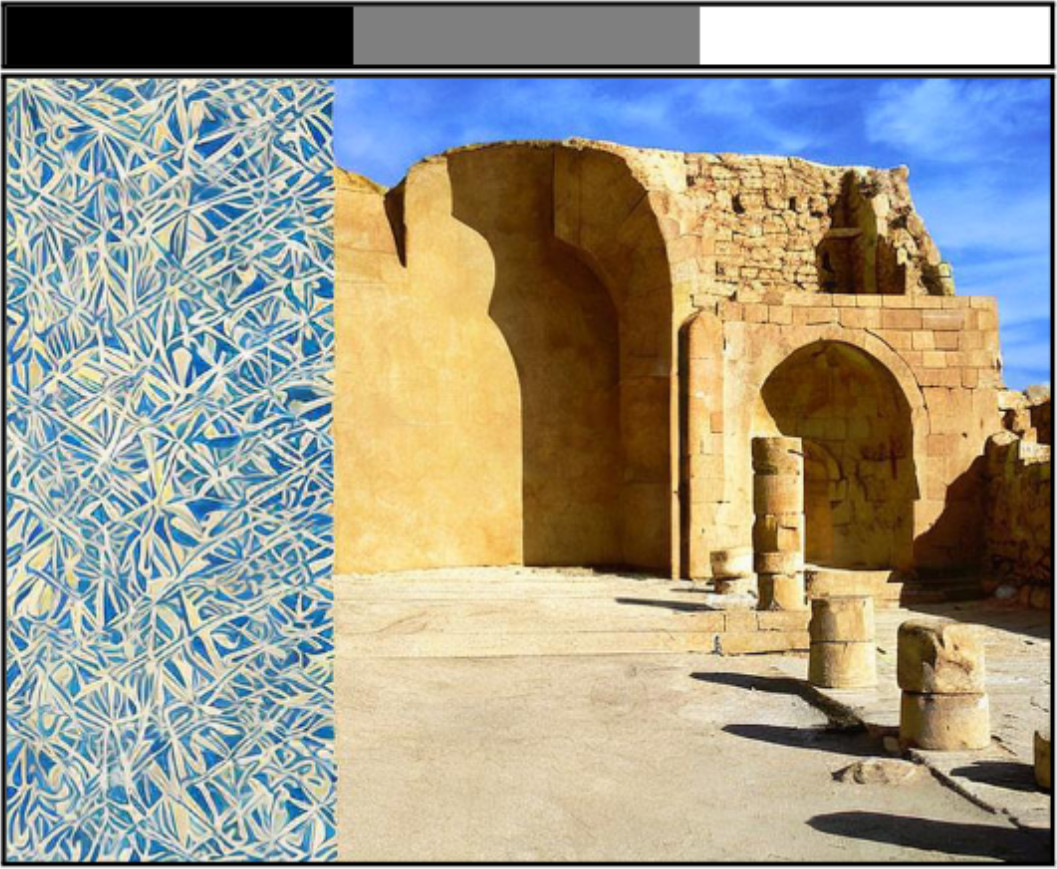

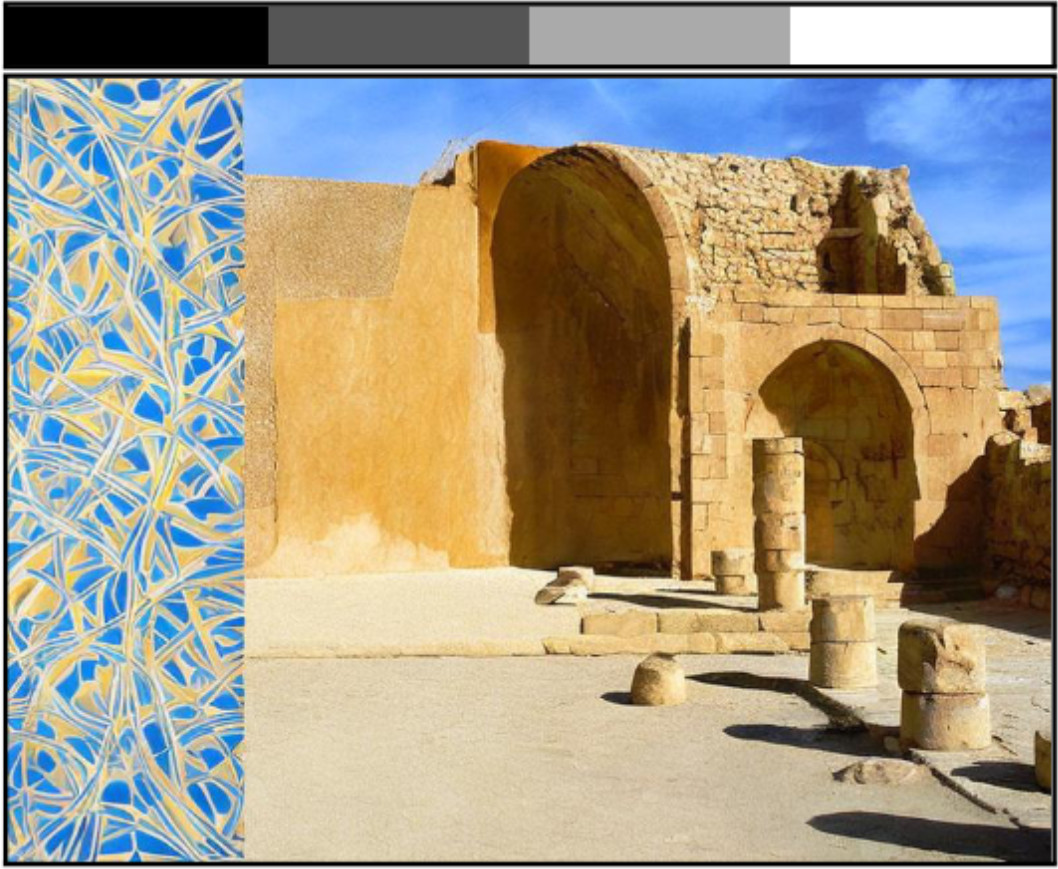

Such granular control over the amount of change opens up a diverse array of new editing capabilities, such as controlling the extent to which individual objects are modified or introducing gradual spatial changes.

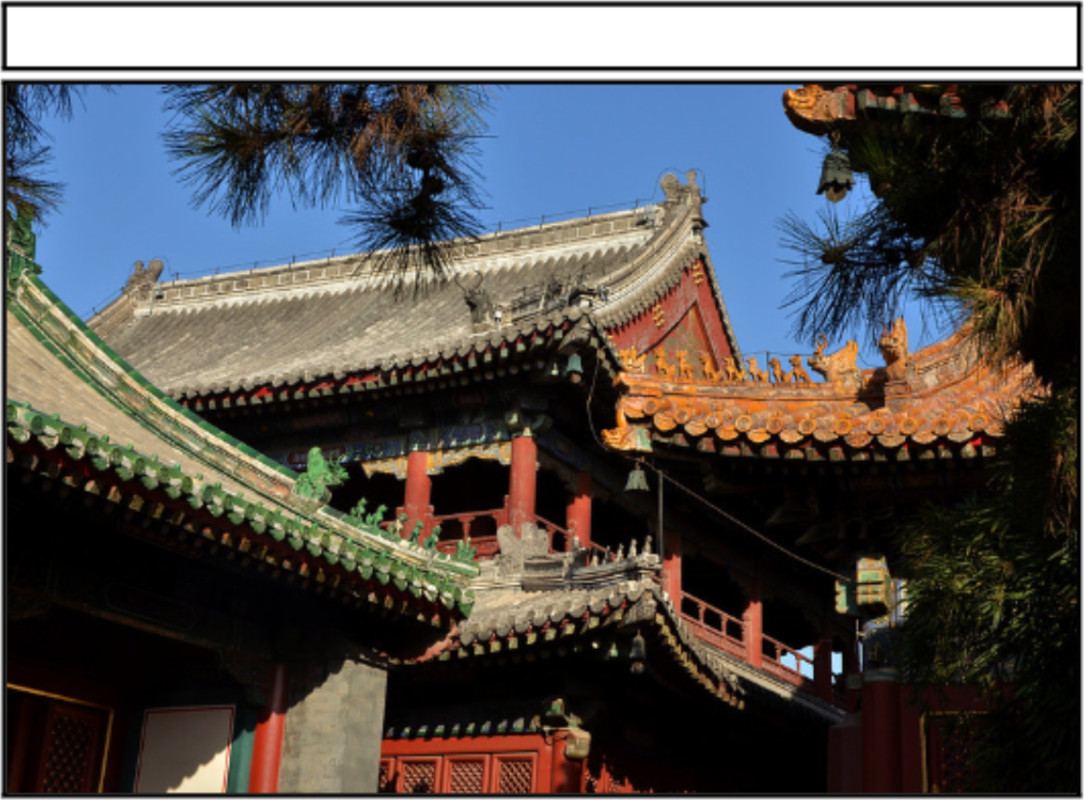

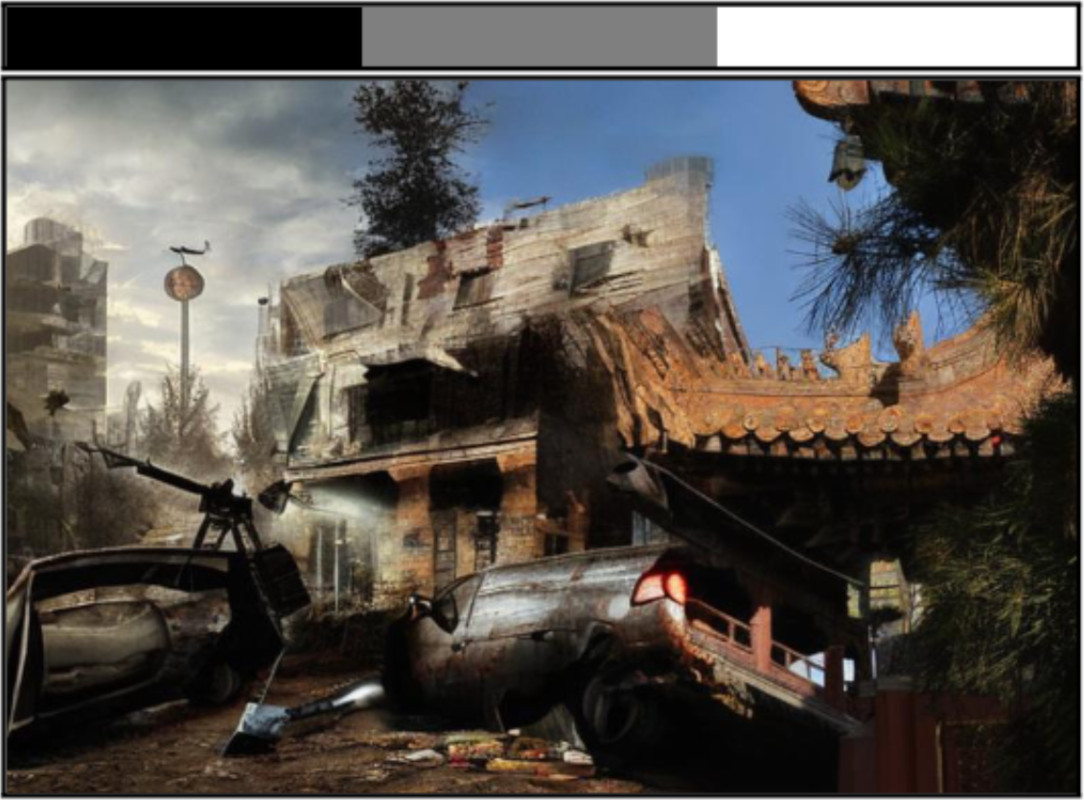

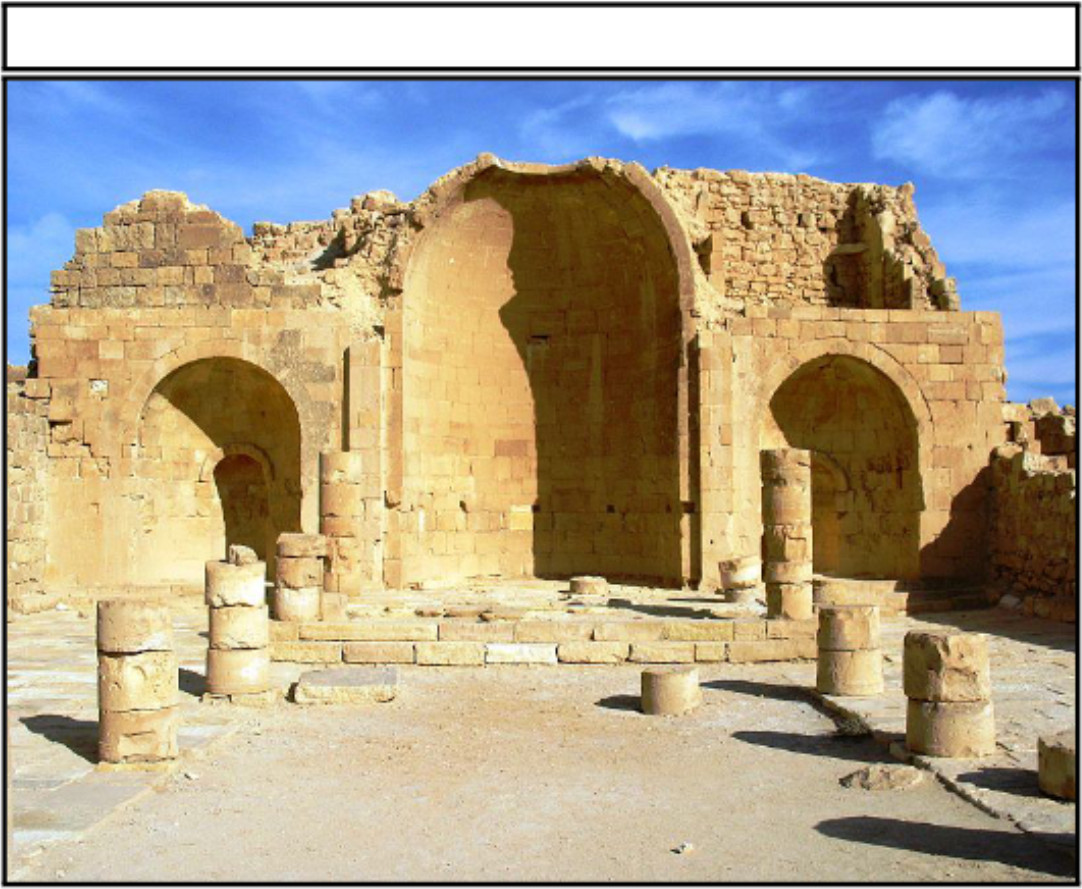

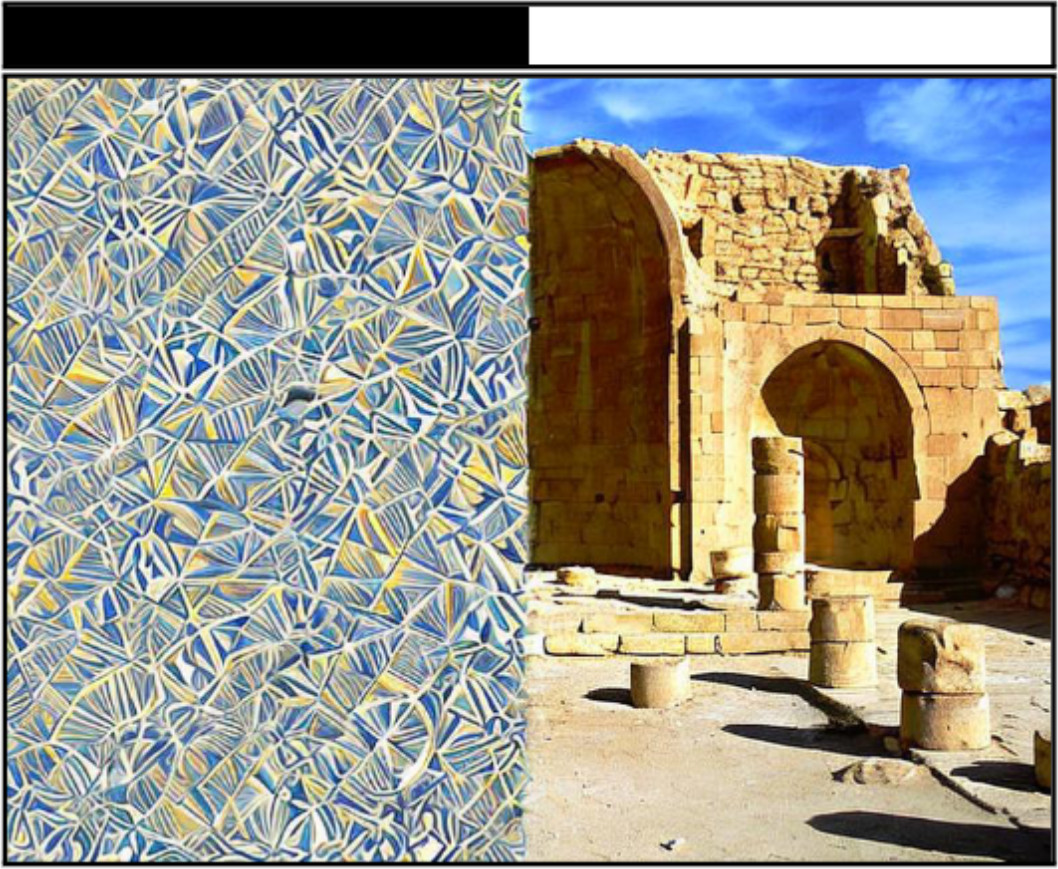

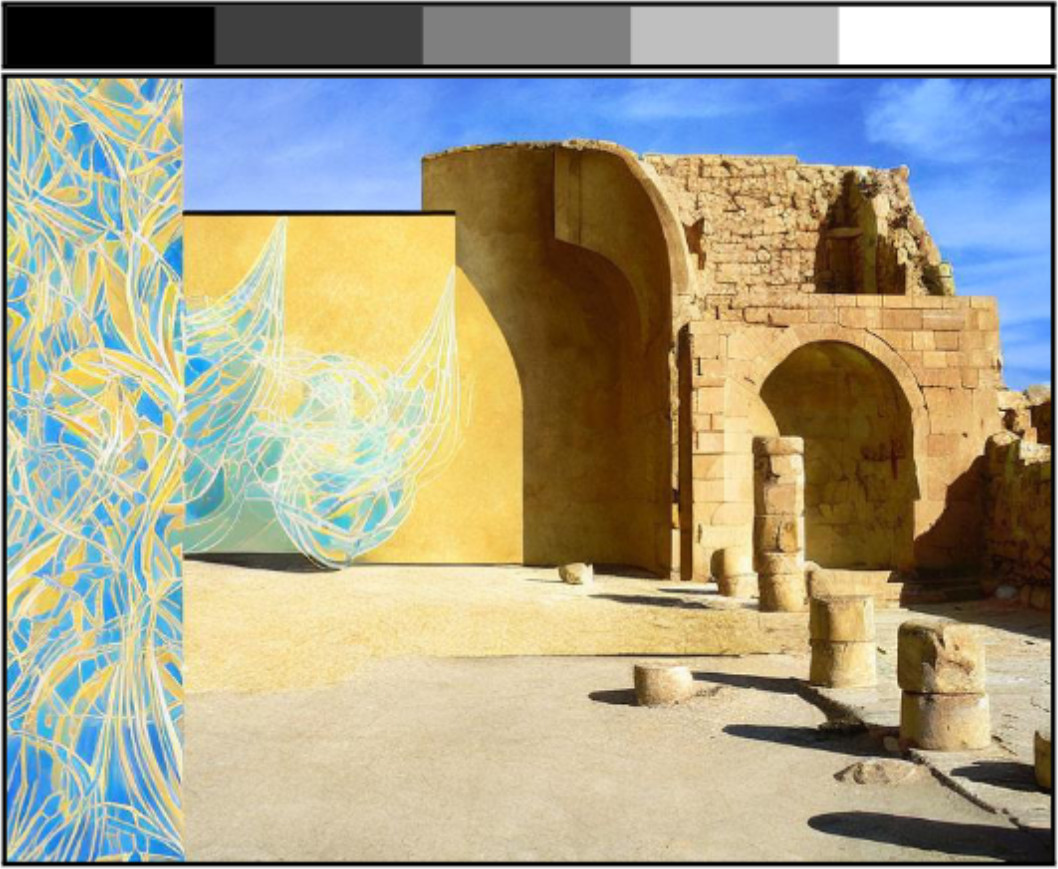

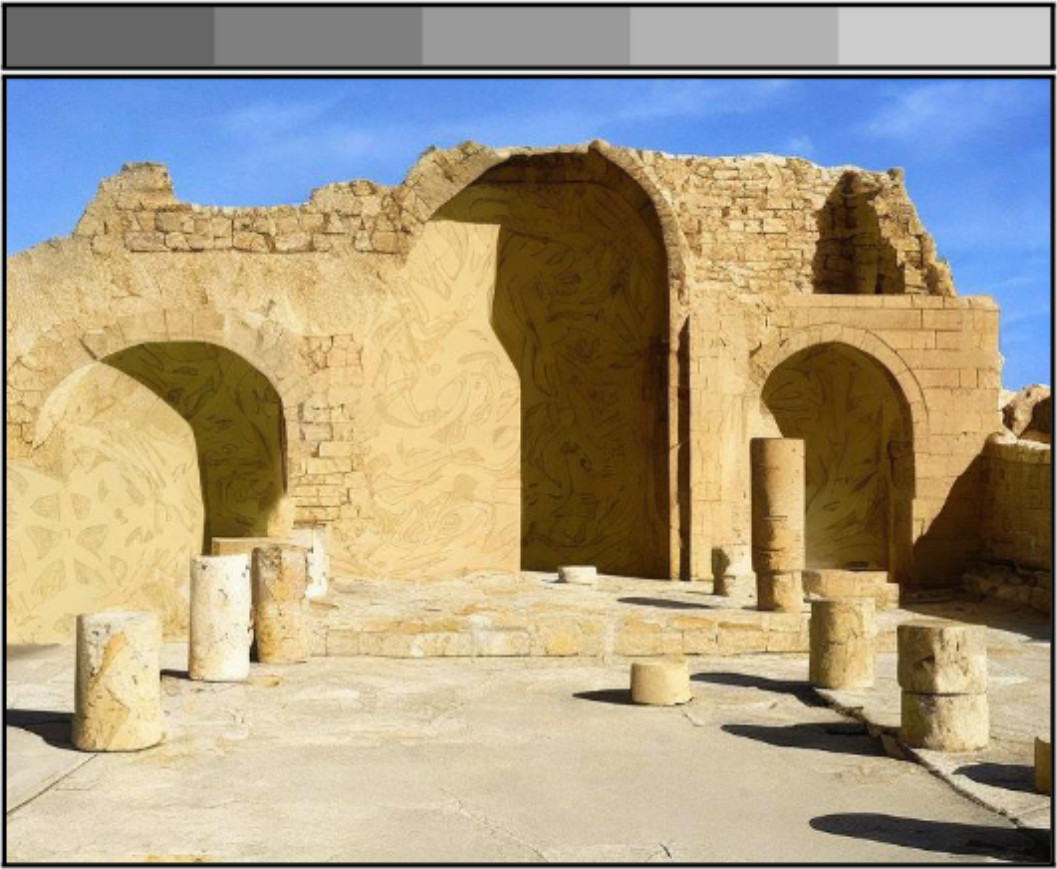

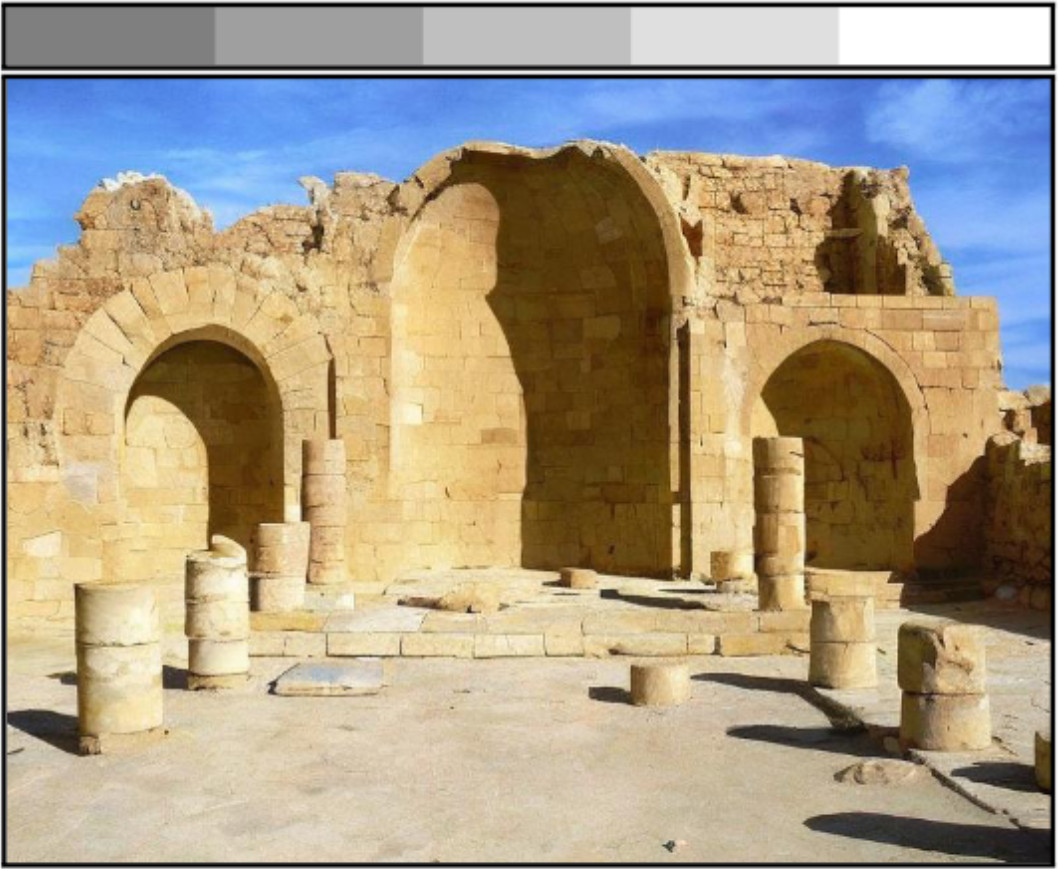

Furthermore, we showcase the framework's effectiveness in soft-inpainting: the completion of portions of an image while subtly adjusting the surrounding areas to ensure seamless integration.
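One way to picture soft-inpainting in terms of a change map is to take a binary hole mask and let the change strength decay gradually outside the hole instead of dropping to zero at its border. The sketch below does this with a linear falloff over Manhattan distance. The function name `soft_inpaint_map`, the `falloff` parameter, and the linear decay are illustrative assumptions; the abstract does not specify how the surrounding strengths are chosen.

```python
from collections import deque


def soft_inpaint_map(hole, falloff):
    """Turn a binary hole mask into a soft change map (hypothetical helper).

    hole: non-empty list of rows of 0/1, where 1 marks pixels to fill in.
    falloff: number of pixels outside the hole that still receive a
    nonzero strength. Returns strengths in [0, 1]: 1.0 inside the hole,
    decreasing linearly with 4-connected distance, 0.0 beyond the band.
    """
    if falloff < 0:
        raise ValueError("falloff must be non-negative")
    height, width = len(hole), len(hole[0])
    dist = [[None] * width for _ in range(height)]
    queue = deque()
    for y in range(height):
        for x in range(width):
            if hole[y][x]:
                dist[y][x] = 0
                queue.append((y, x))
    # Multi-source breadth-first search: each pixel gets its distance
    # to the nearest hole pixel.
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if 0 <= ny < height and 0 <= nx < width and dist[ny][nx] is None:
                dist[ny][nx] = dist[y][x] + 1
                queue.append((ny, nx))

    def strength(d):
        if d is None:  # the mask contains no hole pixels
            return 0.0
        return max(0.0, 1.0 - d / (falloff + 1))

    return [[strength(d) for d in row] for row in dist]
```

With `falloff=0` this reduces to the original hard mask, which corresponds to ordinary inpainting where the surroundings are left untouched.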

Additionally, we introduce a new tool for exploring the effects of different change quantities.

Our framework operates solely during inference, requiring no model training or fine-tuning. We demonstrate our method with current open state-of-the-art models and validate it through quantitative and qualitative comparisons and a user study.